Coordinated Movement Control of Prosthetic Limbs

Vision: Wearable motion capture could do for human movement analysis and assistive device control what the internet has done for text, images, and videos.

The function of an assistive device, such as a prosthetic limb or an exoskeleton, is to compensate for missing or injured limbs. Before an assistive device can assist its user, it needs to know the user's current state and upcoming locomotion needs. Using sensors fitted to the user's body, the device can determine what motion it needs to perform to support the user.

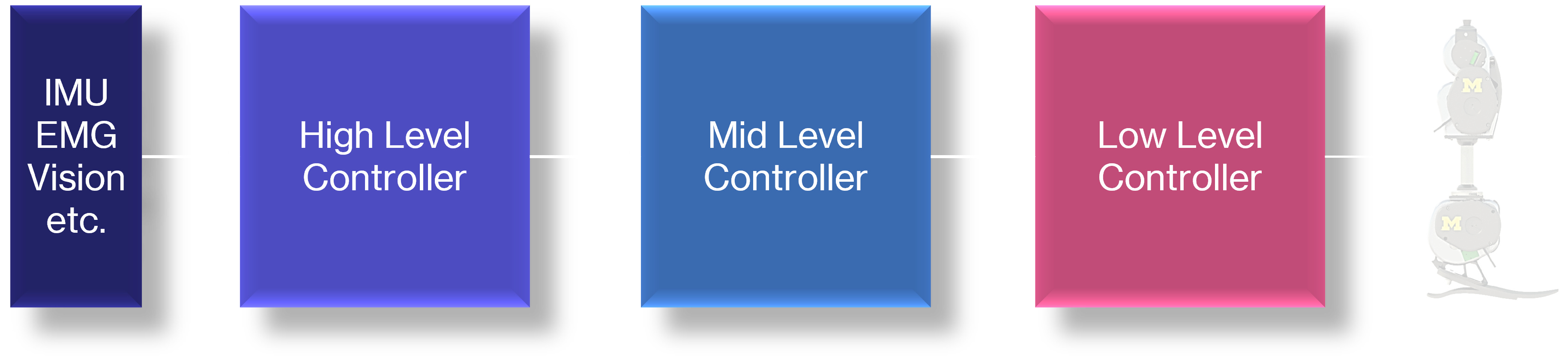

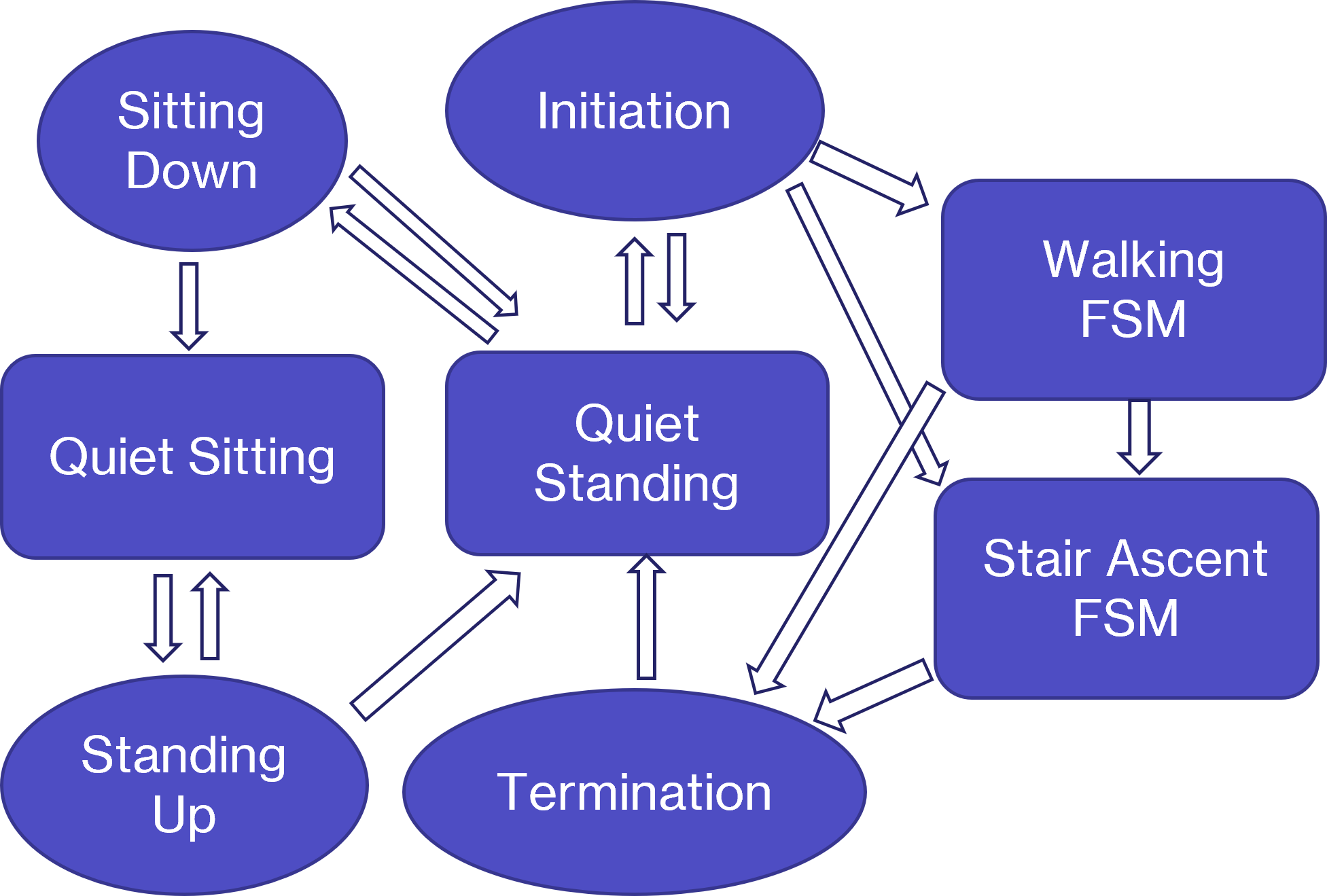

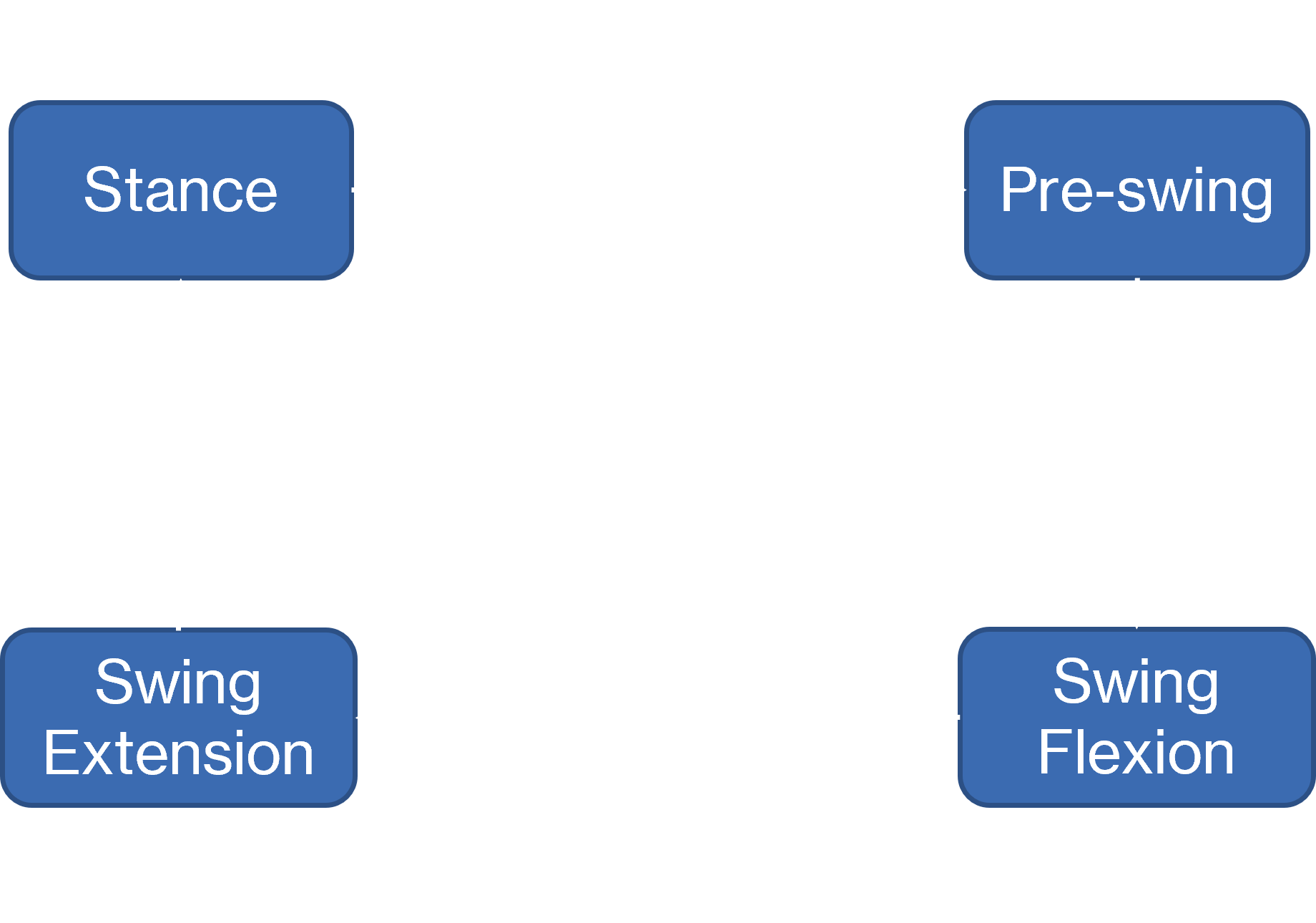

Traditionally, prosthetic controllers (the three-stage controller shown below) are designed by explicitly segmenting human gait into activities, such as flat-ground walking or stair ascent, and into phases, such as stance and swing. Activities are implemented using the purple finite state machine (FSM) below, and phases using the blue FSM. The transition rules between the states of these FSMs are usually hand-crafted. Pre-defined kinematic or impedance trajectories for each segment are then executed on the prosthetic leg (using the pink PID controller) to enable the amputee to walk. However, pre-defined trajectories struggle to capture the true complexity of human gait: covering most of the variation in human movement would require an impractically large number of such segments.

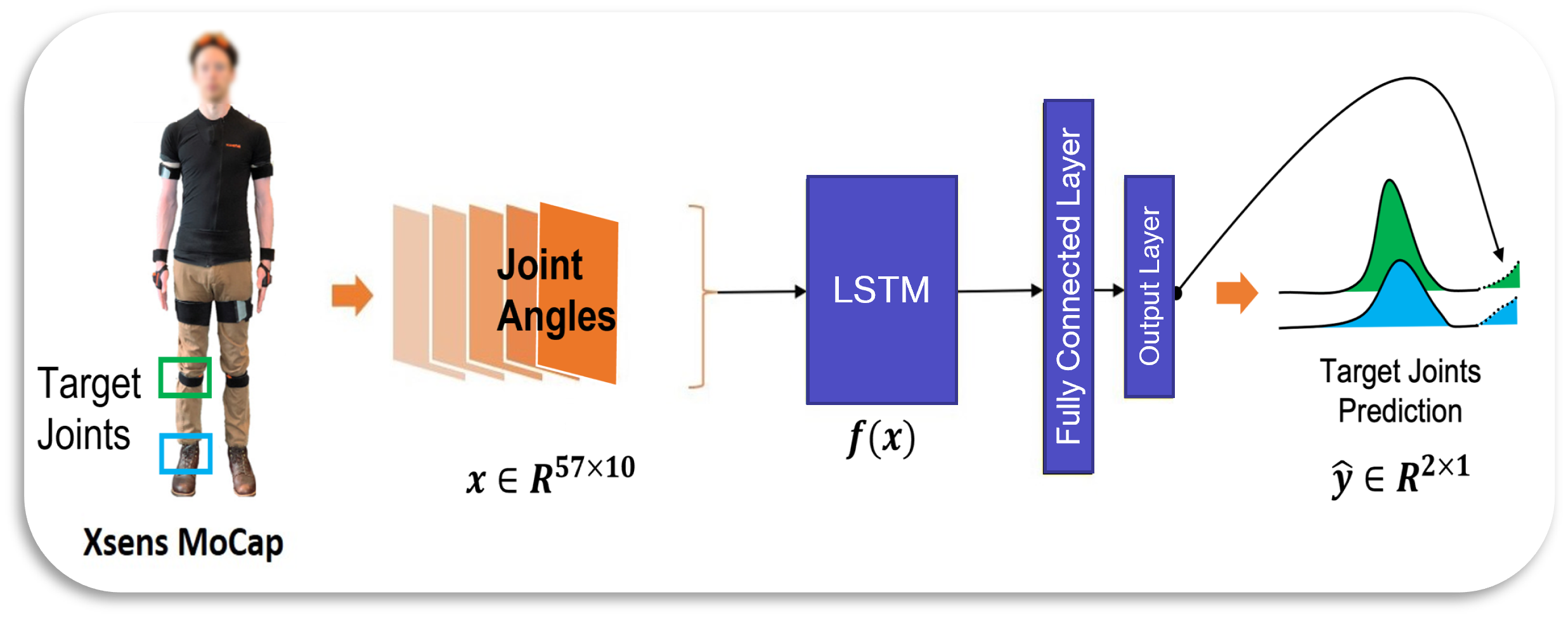
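To make the three-stage structure concrete, here is a minimal Python sketch of a phase FSM with hand-crafted transition rules, a lookup table of pre-defined targets, and a PID loop. Everything specific in it is an assumption for illustration: the load thresholds, the knee-angle targets, the function names, and the use of a single foot-load signal are hypothetical, and the activity FSM is reduced to an input label. It is not the controller shown in the figure.

```python
from dataclasses import dataclass

# Hypothetical thresholds on foot load (fraction of body weight).
HEEL_STRIKE_LOAD = 0.2   # above this, the foot is considered in contact
TOE_OFF_LOAD = 0.05      # below this, the foot is considered airborne


def next_phase(phase: str, load: float) -> str:
    """Hand-crafted transition rule for a two-state phase FSM."""
    if phase == "swing" and load > HEEL_STRIKE_LOAD:
        return "stance"
    if phase == "stance" and load < TOE_OFF_LOAD:
        return "swing"
    return phase


# Pre-defined knee-angle targets in degrees per (activity, phase).
# The values are made up; each new activity needs new hand-written entries.
KNEE_TARGET = {
    ("walk", "stance"): 5.0,
    ("walk", "swing"): 60.0,
    ("stair_ascent", "stance"): 20.0,
    ("stair_ascent", "swing"): 80.0,
}


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, target: float, measured: float, dt: float) -> float:
        """Return the actuator command that drives `measured` toward `target`."""
        error = target - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def control_step(activity, phase, load, knee_angle, pid, dt=0.01):
    """One control tick: update the phase, look up the target, run the PID."""
    phase = next_phase(phase, load)
    target = KNEE_TARGET[(activity, phase)]
    return phase, pid.step(target, knee_angle, dt)
```

The lookup table is where the scaling problem shows up: every additional activity or phase needs its own hand-written entry and its own transition rules.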

Coordinated movement control is a data-driven approach to this problem. We can imagine all human movement as being generated by a dynamical system similar to the one shown in the figure below (albeit much higher-dimensional and less deterministic). The axes represent the degrees of freedom of the body, i.e. the joint angles, and the moving dot represents the body's current pose. Since the data lie on a lower-dimensional manifold in the higher-dimensional joint space, the time history of intact-limb motion carries information about the missing limbs. We can learn a predictive model of this system from large amounts of data and use it to estimate reference trajectories for the prosthetic device from the motion of the intact limbs. This is the essence of coordinated movement control.

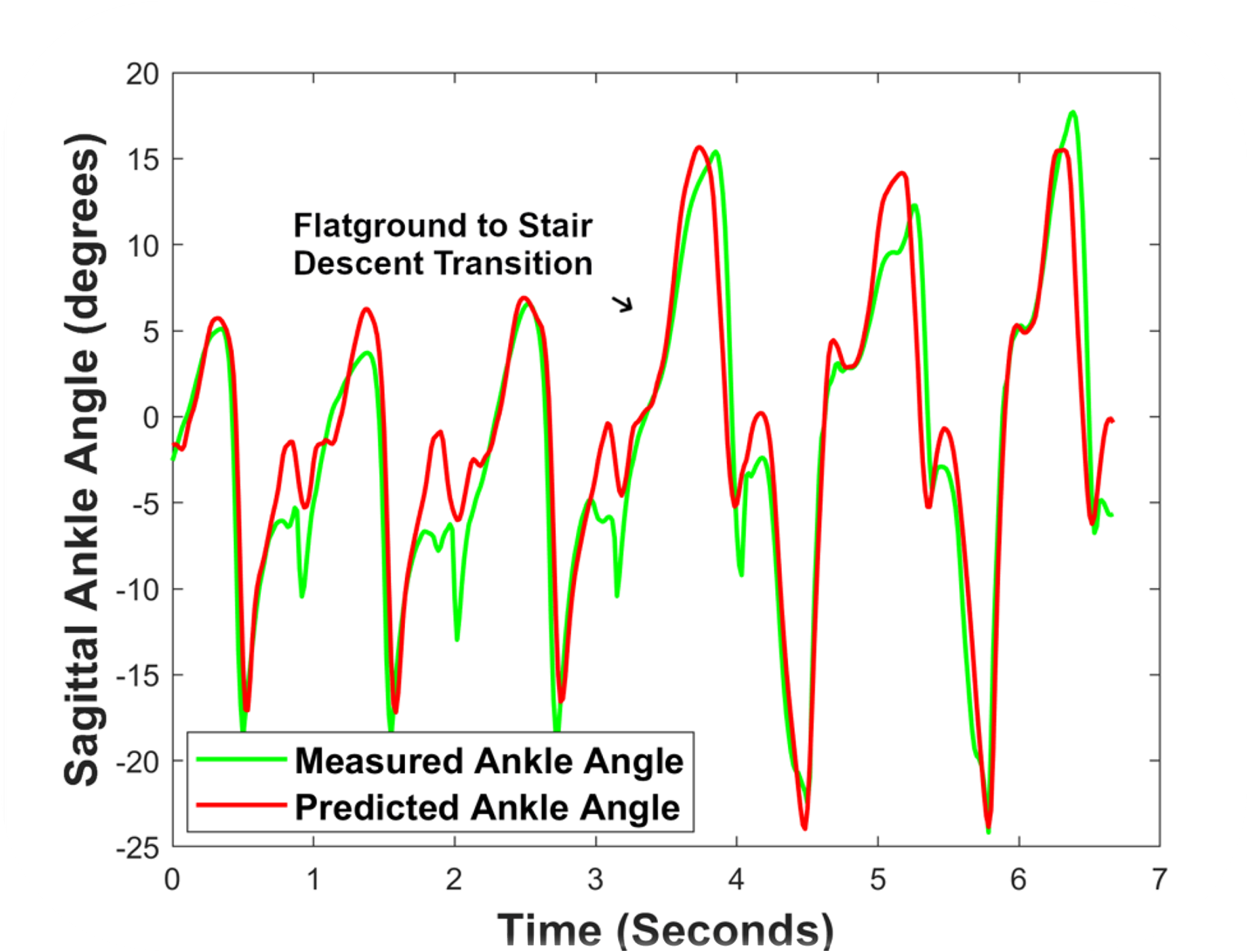
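The idea of recovering a missing joint from the time history of intact joints can be illustrated with a deliberately simple stand-in: linear least squares on a window of past samples (a time-delay embedding). This is a sketch under stated assumptions, not the model used in this work. The signals are synthetic, noise-free sinusoids, and the names `delay_embed`, `intact`, and `missing` are hypothetical.

```python
import numpy as np


def delay_embed(x: np.ndarray, window: int) -> np.ndarray:
    """Stack the last `window` samples of every channel into one feature row.

    x has shape (T, n_joints); the result has shape
    (T - window + 1, window * n_joints).
    """
    n_samples = x.shape[0]
    rows = [x[t - window + 1 : t + 1].ravel() for t in range(window - 1, n_samples)]
    return np.stack(rows)


# Synthetic periodic "gait": two intact joint angles and one missing joint
# that is coordinated with them (a phase-shifted mix of the same rhythms).
t = np.linspace(0.0, 20.0 * np.pi, 2000)
intact = np.column_stack([np.sin(t), np.sin(2.0 * t + 0.5)])
missing = 0.8 * np.sin(t - 1.0) + 0.3 * np.sin(2.0 * t)

window = 10
X = delay_embed(intact, window)
X = np.column_stack([X, np.ones(len(X))])  # bias column
y = missing[window - 1 :]                  # align targets with window ends

# Fit on the first part of the recording, evaluate on the rest.
split = 1500
weights, *_ = np.linalg.lstsq(X[:split], y[:split], rcond=None)
pred = X[split:] @ weights
rmse = float(np.sqrt(np.mean((pred - y[split:]) ** 2)))
print(f"held-out RMSE: {rmse:.2e}")
```

The history window matters here: the current intact pose alone does not reveal the phase shift of the missing joint, but a short history does. Real gait is noisy and far from linear, so a practical system would need a more expressive model trained on much more data.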

The figure below shows predictions from a single model for two different activities and the transition between them, without the need to segment gait into activities or phases.

In Visual Control, we augment the coordinated movement approach with egocentric vision and scale it to more complex natural environments.

In recent years, wearable motion capture systems have become highly accurate and can match marker-based motion capture systems. They allow locomotion data to be collected in more diverse and complex out-of-the-lab scenarios. We expect wearable sensors to become much more affordable in the future. This would generate human locomotion data on an unprecedented scale, which could be used to learn more expressive predictive models of gait.